Introduction

When I was attempting to show how technology innovation accelerated during the 20th century, I used to mention that my mother was born before the Wright Brothers flew the first airplane and died after Neil Armstrong walked on the moon. This normally got my listeners’ attention – it was a remarkably swift path, particularly when one considers how long it took to get to the Wright Brothers.

I write that "I used to" mention this because at some point my wife, Barbara, said that she thought it was even more remarkable that we were both born before the birth of the industry in which we have spent our entire professional lives. On Valentine’s Day, 1946, the Department of the Army and the University of Pennsylvania Moore School of Engineering announced the completion of the Electronic Numerical Integrator and Computer (ENIAC), launching the age of the computer.

Since that date, we have gone from a 27-ton, 1,800 square-foot computer to much more powerful computers you can hold in the palm of your hand. Not only have computers progressed this way, but the technology has also migrated to every possible type of device, changing transportation, military weaponry, photography, education, communications, business, law enforcement, medicine and just about everything else you can think of.

In Steve Jobs’ great graduation speech at Stanford University in 2005, he spent a good amount of time speaking about "connecting the dots." In Jobs’ words, "you can’t connect the dots looking forward; you can only connect them looking backwards."

In this series, my intent is to connect the dots in the life of the technology around us so we can look at where we came from and what the ramifications of the trip have been. I will also look at the elements that underlie the technology that is now part of our lives and speculate as to where that technology may take us.

The impact of the technology explosion is obvious in some areas, such as the constant geometric increase in the power of computers as described by "Moore’s Law." In other areas, such as photography, journalism and medicine, it may not be as apparent until we step back and realize that the world has "gone digital" and that this transition, in some way, impacts everything. As we step back, perhaps, we will be in awe as we note how far we have come. I hope so – and welcome aboard for the ride!

The Advance of the World Wide Web

It is hard to believe that the graphic browser has been around for less than 20 years and really did not come into common use until 1995 to 1996. In that short time, it has changed how we gather information, shop, pay bills, advertise, and keep in touch with family and friends – in short, just about everything we do.

As with most innovations, the graphic browser did not just fall out of the sky. It was the confluence of years of thought with hardware and software development. Throughout the history of scientific progress, many innovators and science fiction writers have seen things as they should be or would be long before the technology was available to implement their vision. Perhaps the most famous examples are Leonardo da Vinci’s drawings of submarines and flying machines, made long before the technology existed to make these visions viable.

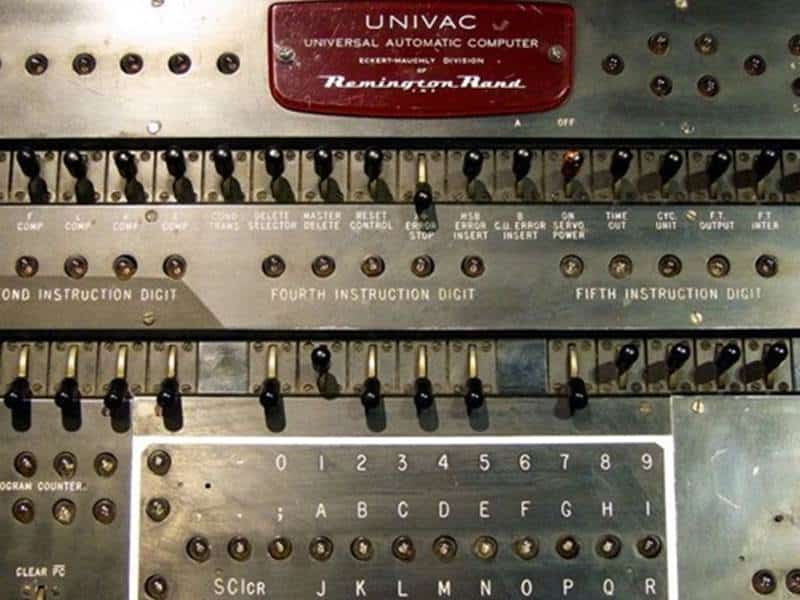

The idea that later became the World Wide Web originated as World War II was winding down. Two great discoveries came out of World War II: the atomic bomb and the first working electronic digital computer, the Electronic Numerical Integrator and Computer (ENIAC), both of which were developed under government funding.

The ENIAC development effort set the standard for major computer systems development in the future – it was late and over budget – but it was a landmark development that paved the way for all future computer development. While the reason for its development was the rapid calculation of gunnery trajectories, those involved realized that computers would have uses other than those related to the military. One of the developers, J. Presper Eckert, supposedly envisioned that 25 computers like this could satisfy all the country’s business needs through the end of the 20th century. Although he underestimated a tad – the iPhone 4 has much more power than the ENIAC and doesn’t come close to meeting the needs of an entire business – he was right about one thing: computers were here to stay, and would become a key part of business operations.

An Idea: The World Wide Web

A more prescient view was put forth by Vannevar Bush in a July 1945 article for The Atlantic titled "As We May Think". Bush, former Dean of the MIT School of Engineering and science advisor to President Roosevelt (from which position he oversaw the development of both the atomic bomb and ENIAC), saw computers as tools that would aid humans in research. While he had the equipment all wrong – what was needed to make the system he envisioned work was actually decades away – his idea of a computer that had access to and could retrieve all possible information that one might need became the basis for what we now know as the World Wide Web and many of its most popular tools, such as Wikipedia and Google. (Read more about the history behind the Web in The History of the Internet.)

Bush also pointed out that we think and want information in an associative manner, which is different from the linear way in which we read (start to finish, top to bottom). When reading an article or discussing a subject, our minds constantly jump. Bush envisioned a system that, unlike a book, could take you from information about the World Wide Web to WWII, FDR or the atomic bomb, and then let you delve even deeper to learn about Eleanor Roosevelt, Japan or Alan Turing. Through the power of linking, this is now a common way in which people explore and retrieve information.

Bush’s theories were further refined by Theodor Holm "Ted" Nelson, who, in 1964, coined the term hypertext to refer to material that went "deep" rather than "long." So, for instance, if you wanted more information about Alan Turing, as mentioned above, hypertext is what allows you to "click" Turing’s name and find out more about him. The term hypertext was eventually expanded to hypermedia as audio, graphic, and video computer files came into being.

On to Xanadu

Nelson had begun work in 1960 on a system that he called Project Xanadu to bring his ideas to fruition. He documented his efforts and plans in a very interesting and unusual book called "Computer Lib/Dream Machines" (1974). His work continues to this day.

The GUI Emerges

Another key player in this story is Alan Kay. A computer scientist and visionary, Kay is well-known for coining the phrase, "The best way to predict the future is to invent it." As it turns out, he had a hand in inventing the future in two ways.

While at Xerox Palo Alto Research Center (Xerox PARC), Kay wrote an article in Byte Magazine in 1978 describing the "Dynabook", his vision of a computer the size of a yellow pad. Students would carry this around and, when information was needed, would obtain it from an invisible net in the sky. It sounds plausible now, but Kay’s vision came long before laptops, tablets, or an accessible Internet.

At Xerox PARC, Kay was part of a team with Adele Goldberg, Larry Tesler, and others that developed one of the first object-oriented programming languages, Smalltalk, and then used it to develop the first graphical user interface (GUI). The GUI was used on Xerox’s Alto and Star systems but became prominent when it was licensed by Apple Computer and used on Apple’s Lisa and Macintosh systems. Apple later licensed the GUI to Microsoft.

The Push for a Network

Parallel to the GUI development was the search by British programmer and consultant Tim Berners-Lee for a system to better manage the great amount of information developed by visiting and resident scientists at the European Particle Physics Laboratory near Geneva, Switzerland (known as CERN). Faced with a multitude of operating systems and word processing programs, Berners-Lee came up with a method of "tagging" information so that it might be found through a common text-based interface. The system, which Berners-Lee called the World Wide Web, was soon opened to users on the Internet, who would telnet to info.cern.ch to access the gateway to information.

While the Web was very useful to scientists and educators, it required users to understand the arcane interface of the Internet, including the telnet utility, and was not something that appealed to the general public.

From Windows to the Web

Parallel to the development of the Web was Microsoft’s progress in its development of the GUI it called Windows. Microsoft’s early attempts in this area had been plain awful (due more to the limitations of its MS-DOS operating system and the poor displays available for PC-compatible machines than to poor design of the GUI interface). When Microsoft introduced Windows 3.0 and ported over GUI versions of Word, Excel and PowerPoint from the Macintosh, it seemed to have finally gotten it (mostly) right.

There was, however, an aversion to the adoption of GUIs by the "techie" types. They felt that one could do more at the command line and that Windows slowed down machines. As a result, the adoption of this technology was slow at first.

Mosaic Breaks Through, Netscape Navigator Seals the Deal

The slow adoption of both the Web and GUI interfaces changed dramatically when Marc Andreessen, a student at the University of Illinois at Urbana-Champaign, and Eric Bina, a co-worker at the university’s National Center for Supercomputing Applications (NCSA), developed Mosaic, a graphic Web browser that allowed users to use the World Wide Web through a GUI interface. Once the computing world was exposed to Mosaic, which only ran on systems with a GUI (Macintosh, Unix with an "X-Windows" interface, and MS-DOS systems running Windows 3.1.1), the demand to use GUI systems overwhelmed techie opposition and the large majority of computer users migrated to GUI interfaces.

Shortly after Andreessen graduated, he, Bina, and Jim Clark, founder of Silicon Graphics, founded Netscape Communications, which created the first truly successful commercial Web browser, Netscape Navigator.

The Early Days of the Web

Bob Metcalfe, an ex-PARCer who developed the Ethernet networking standard, writing in the August 21, 1995, issue of InfoWorld, described the early years of Web development as follows:

"In the Web’s first generation, Tim Berners-Lee launched the Uniform Resource Locator (URL), Hypertext Transfer Protocol (HTTP), and HTML standards with prototype Unix-based servers and browsers. A few people noticed that the Web might be better than Gopher.

In the second generation, Marc Andreessen and Eric Bina developed NCSA Mosaic at the University of Illinois. Several million then suddenly noticed that the Web might be better than sex.

In the third generation, Andreessen and Bina left NCSA to found Netscape…"

Netscape’s Navigator browser was soon followed by Microsoft’s Internet Explorer and eventually begat Firefox, which was in turn followed by Google Chrome. These browsers came to dominate the market. Access to the Web became a major impetus for people to buy smartphones and tablets and, within 20 years, the Web became a major part of many people’s lives.

In the words of Billy Pilgrim, "… and so it goes."

The Rise of E-Books and Digital Publishing

In 1981, my co-author, Barbara McMullen, and I began work on a book on one of the newest technologies available to individuals, telecommunications. We developed the book using the latest technology at the time, a word processor on an Apple II, and even provided illustrations using an Apple Graphics Tablet. The use of these features allowed us to cut writing time dramatically compared with the previous "high-tech" tool, the typewriter. We could actually edit the document, making changes to sentences, moving paragraphs around, and inserting brand new text at various points in the manuscript – all capabilities that were not possible with typewriters.

Unfortunately, similar improvements had not yet come into the world of publishers in 1982 when we submitted the finished manuscript of "Microcomputer Communications: A Window on the World" to John Wiley and Sons. Some publishers were experimenting with programs that translated computer files into the formats required by their typesetting machines, while others were simply retyping submissions received in printed format. Our editor at Wiley would review the document, make changes that he thought corrected or improved the work, and verify the changes with us. Once the manuscript had passed final muster, a proof would be printed for final review and the book would be scheduled for production. The scheduling was based not only on the printing process but also on the timing of the next Wiley catalog that would go to bookstores and distributors.

Our book finally reached production status in 1983, almost two years after we had begun the project and a year after we had first turned the manuscript over to Wiley. This did not seem to be a major problem to Wiley at the time, as it was used to this type of turnaround on projects, even those involving computer technology, because new large ("mainframe") or smaller ("minicomputer") systems had long product cycles. It was, however, the kiss of death in the new personal computer reality. The book was well out-of-date before it reached the bookstores.

From Typewriter to E-Book: A Revolution in Publishing Begins

In those days, there were small bookstores scattered throughout all major cities. Most smaller towns had bookstores too, often near the town’s railroad station. The big chains that we have since come to know did not really exist; Barnes & Noble was in business, but was known primarily as a seller of new and used textbooks.

During this time, publishers were working with consultants to link Apple IIs and IBM PCs directly to typesetters to eliminate the need for rekeying books. Although this was relatively easy to accomplish from a hardware standpoint, it required either the author or the editor to enter rather arcane codes to instruct the typesetter when to break a page, what to print in bold or italics, etc. What the computer/publishing world was looking for was a WYSIWYG ("What You See Is What You Get") system, a system where what the writer saw on his or her computer screen was exactly what would appear on the printed page (including graphics, multiple columns, large fonts, etc.).

The production problem was solved with the advent of three products: the Apple LaserWriter; PostScript, a page description language from Adobe that provided WYSIWYG capabilities to the Macintosh when used with a printer or typesetter containing a PostScript processor; and PageMaker from Aldus, a page layout program that supported text and graphics, multiple columns, and various fonts – the features that we expect to see in a book, magazine, or newspaper. While the Apple LaserWriter was the first PostScript device, other high-quality printers and typesetters soon followed; after PageMaker came QuarkXPress, and both were ported to the IBM PC when Windows 3 became commonplace on that platform. (To learn more about Apple’s background, check out Creating the iWorld: A History of Apple.)

In subsequent years, all publishers began accepting manuscripts in digital format – by email or delivered on a disk or USB drive. As the methods of production changed, so did the makeup of the industry. Technological changes in billing and payment, such as electronic data interchange and electronic funds transfer, greatly reduced the need for clerical help, while improved distribution processes allowed firms to consolidate.

The distribution reductions were due to the growth of national chains of bookstores – Barnes & Noble, Borders, B. Dalton and Waldenbooks came to dominate the landscape, effectively leading to the demise of the vast majority of local bookstores. The chains had many more selections and could sell at discounted prices due to their purchasing power. The trend was accelerated when Barnes & Noble and Borders acquired B. Dalton and Waldenbooks, respectively, and built larger and larger box stores that incorporated cafes, and included music and children’s sections.

In another development, books on tape, first on cassette tape and then on compact discs, became hot items, allowing "readers" to enjoy books while walking or driving.

The Reading Public Takes Notice

The above changes, all spawned one way or another by technology, went generally unnoticed by the reading public to this point. This changed in 1995 with the emergence of Amazon.com as a major online bookstore. Amazon allowed customers to shop from home and at the office, wherever a computer was available, while providing a huge inventory, reduced prices and, in most cases, the absence of taxes. Amazon.com sounded the final death knell for the local bookstore, which could compete with neither Barnes & Noble’s ambiance nor Amazon’s convenience and low prices.

With the digital revolution in full swing, the next step was the electronic book (e-book), which aims to replace the printed book. There had been e-book readers around for a number of years, but they had had little success due to the limited inventory of books available in this format. A rather clunky method of getting books to the reader (e-books would be found online via a PC connection, downloaded to the PC and then transferred to the reader via a USB connection) also affected their popularity.

This all changed in November 2007, when Amazon.com introduced the Kindle, a lightweight device that could download e-books directly from Amazon via a wireless connection. Kindle revolutionized the industry, and by July 2010, Amazon was selling more e-books than hardcover books, and had introduced a number of Kindle models. Amazon also released Kindle apps for the iPhone and iPad, as well as the Macintosh and Windows operating systems, making it possible for users to purchase and read e-books on a broad range of devices, and to share e-books between these devices without having to download the same book multiple times.

Barnes & Noble also introduced its version of an e-book reader, the NOOK, in 2009. This device allows direct downloads from Barnes & Noble’s inventory. The company, in turn, began to divert its focus from big-box stores to becoming a provider of electronic books and devices. The rapid move to e-books by Amazon and Barnes & Noble – and the success both companies enjoyed in this area – proved too much for their prime competitor, Borders, which closed its doors in 2011.

The Emergence of Self-Publishing and Publishing on Demand

The digital revolution came back full circle to the production cycle with the advent of publishing on demand (POD) services. Throughout the history of publishing, there has been a niche called self-publishing, also referred to as vanity publishing, where an author pays a printing service to produce some number of books from a manuscript; the price of this process runs into the hundreds or thousands of dollars. Electronic interfaces refined this process, and firms sprang up to accept input from authors and prepare the necessities for the printing of books. Here the process diverges from self-publishing in that books are not printed until they are actually ordered by consumers, hence publishing on demand. The POD services offered some marketing support and editing services, but the very basic plans usually cost only a few hundred dollars.

Once again, enter Amazon! Its subsidiary, CreateSpace, developed a basic POD that costs the author under $20 (with additional features available at higher costs) and the books are almost immediately available on Amazon. Customers purchase the books online through Amazon and the author receives monthly royalty checks. Major traditional publishers have also adopted the POD model for books whose initial demand has subsided. This allows the books to continue to sell, but eliminates the need for maintaining large inventories in warehouses.

The Future of Reading

There have been major changes in the last 30 years in the world of books, all of which are of benefit to consumers. However, there has been great disruption within the industry, much of which has occurred under consumers’ radar. Gone are typesetters, workers in local book stores, warehouse workers, those involved in the distribution process, many publishing house executives, editors, salespersons, and clerical workers.

But then, that’s technology. The world changes around us, and we are often forced to adapt. Sometimes, we recognize the changes as they happen. Most times we do not.

From Vinyl Records to Digital Recordings

Perhaps nowhere has the impact of technology been more obvious than in the music industry. New delivery systems have sprung up, causing once-successful businesses to fail; a major industry organization has changed course; industry-related lawsuits have worked their way up to the Supreme Court, and a computer company has become the largest music retailer in the country – and it’s all happened in the last 50 years, dramatically accelerating over the last 20. Here we take a look at this process as it occurred.

From Singles to Big-Box Record Stores

In the mid-1960s, music buyers were making the transition from "singles", vinyl records that rotated at 78 or 45 revolutions per minute (RPM) and held a single song on each side, to "albums," which spun at 33 RPM and included six to 10 songs on each side. There had been albums for a number of years, but they were generally collections of previously recorded singles, grouped by a theme such as "Love Songs by …" or "The Greatest Hits of …". In addition, record players that played albums generally cost significantly more than record players that played only singles. Players that played 33 RPM generally had settings (and an attachment for 45 RPM), allowing them to play both albums and singles. These units were called high-fidelity systems, or Hi-Fi. As the technology improved, these came to be called stereos, for their stereophonic sound.

The transition away from singles occurred as a result of a number of factors. For one, the price of a record player decreased as systems went from tube-based to transistor-based. The music-buying public, buoyed by boom times and encouraged by music radio stations, also began spending more money on records. Finally, albums were being recorded as original music with unifying themes – the most important of these, The Beatles’ "Sgt. Pepper’s Lonely Hearts Club Band", was released on June 1, 1967 – and the transition moved into high gear.

The growth in music-related sales led to the development of chains of big-box record stores, such as Tower Records, with large inventories and discounted prices. The success of these chains produced what has become a common effect in many growth industries – the eventual disappearance of many small, local record stores.

A Shift in Music Production

Another important technological innovation, the Moog Synthesizer, was introduced in June 1967. It allowed one electronic device to simulate and mix the sounds of many instruments. Diana Ross and the Supremes released the first rock song backed by a synthesizer, "Reflections", in July 1967. This influenced other well-known artists, including The Doors, The Monkees, The Rolling Stones, The Byrds, Simon & Garfunkel and Buck Owens, and marked the early stages of what would eventually become psychedelic rock.

Years later, music artists such as Herbie Hancock, Laurie Spiegel, Todd Rundgren and Trent Reznor turned to computer systems as composition tools. As a result, music-editing programs such as Digidesign’s Pro Tools became standard tools for musicians.

The Rise of MP3s and Digital Downloading

Of all the technological developments, none would have the long-range disruptive impact of the 1993 publication of the MPEG-1 Audio Layer III (MP3) standard for digital audio compression, used for the transfer and playback of music. The first application of this standard was simply the copying of music from CDs to computers, but in 1994 MP3s began to appear on the Internet, allowing music to be widely shared through email and Internet Relay Chat (IRC). This ability became an immediate cause for concern to the Recording Industry Association of America (RIAA), an industry group whose members owned many of the copyrighted works being shared via MP3 file transfers. (Learn about the RIAA’s most recent move against illegal downloading in SOPA and the Internet: Copyright Freedom or Uncivil War?)

The issue of copyrighted file sharing came to a head (the first of many such turning points) when Diamond Multimedia announced the Rio PMP300, a portable MP3 player, in September 1998. The following month, the RIAA filed an application in the U.S. District Court for the Central District of California for a temporary restraining order to prevent the sale of the Rio player, claiming the player violated the Audio Home Recording Act of 1992. The court ruled against the RIAA’s application, and 200,000 Rio players were sold.

Napster Brings MP3s to the Masses

In spite of the RIAA’s concern, at this point the distribution of copyrighted music was limited mainly to the small number of Internet-savvy people who were able to use IRC. This situation changed dramatically in June 1999 when Shawn Fanning, a student at Northeastern University in Boston, introduced Napster, a system that allowed users to exchange music over the Internet. Fanning’s system did not require that the music be stored on a central server. It simply allowed users to find out which other users had songs of interest and then download the songs directly from those users. This approach left it rather fuzzy as to whether Napster was itself violating any laws.
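For readers who want to see the distinction concretely, here is a minimal Python sketch of the idea described above: a central index that records only who has which song, while the song data itself moves directly between users. The class names, method names and sample data are hypothetical, invented for illustration; this is not Napster’s actual protocol or code.

```python
from collections import defaultdict


class Index:
    """Hypothetical central index: it knows who has a song, never the audio."""

    def __init__(self):
        self._holders = defaultdict(set)  # song title -> names of users who have it

    def announce(self, peer_name, titles):
        # A user tells the index which titles are available on their machine.
        for title in titles:
            self._holders[title].add(peer_name)

    def who_has(self, title):
        # Return the names of users holding the title (empty list if none).
        return sorted(self._holders.get(title, ()))


class Peer:
    """Hypothetical user machine holding its own music library."""

    def __init__(self, name, library):
        self.name = name
        self.library = dict(library)  # song title -> file contents

    def serve(self, title):
        return self.library[title]

    def download(self, title, index, peers):
        # Ask the index who has the song, then copy it directly from that user.
        for holder in index.who_has(title):
            if holder != self.name:
                self.library[title] = peers[holder].serve(title)
                return holder
        return None


index = Index()
alice = Peer("alice", {"song-a": b"AAA"})
bob = Peer("bob", {})
peers = {p.name: p for p in (alice, bob)}

index.announce("alice", alice.library)
source = bob.download("song-a", index, peers)
```

In this sketch the index never holds a copy of the file; it only answers the question "who has it?", which is the design choice that made the legal position unclear.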

Some clarity was brought to the situation when heavy metal band Metallica teamed up with rapper Dr. Dre to sue Napster for copyright violation. This action began a series of legal challenges that led to the eventual demise of Napster. In the meantime, however, many other file-sharing systems such as Limewire, Kazaa, Freenet and Gnutella appeared on the scene. Even at this early stage in Internet file-sharing’s history, it was already clear that a major shift was occurring in the way people enjoyed music. What was also clear was that recording artists were fighting an ever-increasing battle to prevent their music from being distributed for free.

In 2001, Apple Computer introduced iTunes for the Macintosh; a Windows version followed in 2003. This system provided a way for people to download, play and organize digital music. Although it was not obvious at the time, this was the first step in a succession that allowed Apple to become the largest retailer of music in the United States. The next step was Apple’s introduction of the iPod that same year. It rapidly became the dominant MP3 player in the world.

The last nail in the coffin of the way things were in the music industry occurred in April 2003 when Apple introduced the iTunes Store, an online music store that allowed the legal purchase and download of music to an iPod (and later the iPhone and iPad). To set up the online store, Apple CEO Steve Jobs had to convince the music companies that it was in their best interests to provide their music to iTunes – and that it was better to sell their songs at a low price (99 cents) than to allow them to be stolen online. Music industry analysts did not believe that the music companies would agree to such arrangements – but they did – and Apple’s initial projection was that it would sell 1 million songs in six months. The iTunes Store sold that first 1 million songs in a mere six days, and Jobs was quoted as saying "This will go down in history as a turning point for the music industry." Which, as we know, it did. (To read more about Apple, check out Creating the iWorld: A History of Apple.)

The New World

Jobs was right. The previous incarnation of the record industry deteriorated quickly. Tower Records went out of business in 2006; Apple became the largest retailer of music in the US, surpassing Walmart and Amazon. Every song purchased through the iTunes Store should remind us that there is no longer a need for these songs to be transcribed, packaged and transported to a retail outlet.

From Snail-Mail to Email

The United States Postal Service was so crucial to the functioning and development of the United States that it was a department of the federal government for most of its first 200 years of operation. But in a day and age when most messages can be sent electronically, the use and importance of physical mail have declined drastically, a testament to the disruption that technological advances can impose. Here we take a look at the history of the postal service in the United States and how the emergence of technology has impacted this pivotal institution.

An Essential American Service Emerges

The official history of the postal service in the United States actually predates the Declaration of Independence with the appointment of Benjamin Franklin by the Second Continental Congress as Postmaster General in 1775. This appointment carried on a tradition of government-involved mail service.

The first Postmaster General appointed under the Constitution, Samuel Osgood, was appointed in 1789. Postmaster became a Cabinet-level position in 1792. From that date forward, there has been constant expansion of the role of the post office in the quest to provide mail service to all citizens of the United States.

1847 brought stamps to the service, and prepayment of postage was made mandatory in 1855. The service at that time required senders and recipients to send and pick up mail at their local post offices (something many of us still do). In 1863, free delivery began in large cities. At the same time, uniform postage rates based on the size and type of mail were put into use.

In the same time period, 1860-1861, the Post Office instituted the Pony Express, a fast mail service that carried mail across the Western United States by horseback. Although the Pony Express became redundant when the transcontinental telegraph and railroad systems came into use, its institution showed the federal government’s commitment to timely mail delivery throughout the United States. The use of the rail system began in 1862 on an experimental basis and became universal with railroad station post offices in 1864. Airmail was introduced in 1918.

Postmaster General John Wanamaker pushed for the extension of free delivery into rural areas and, prior to his leaving office in 1893, funds were allocated for such an experiment. The post office had the right to reject any delivery route that it considered improperly paved, too narrow, or otherwise unsafe. Between 1897 and 1908, local governments spent an estimated $72 million on bridges, culverts and other improvements to provide for Rural Free Delivery (RFD) service. RFD became permanent and nationwide in 1902. It is still considered by many to be the most important governmental action in bringing together the rural and urban portions of the United States until the introduction of the Interstate Highway System in the 1950s.

In 1970, the Postal Reorganization Act was signed by President Nixon, resulting in the spin-off of the Cabinet-level post office department into the independent United States Postal Service (USPS). The act, which took effect on July 1, 1971, stipulated that the "USPS is legally obligated to serve all Americans, regardless of geography, at uniform price and quality."

From Glory Days to the New Normal

In 2011, the USPS, with its 574,000 employees, was the second-largest civilian employer in the U.S. (behind Walmart) and, with more than 218,000 vehicles, operated the largest vehicle fleet in the world. But times have changed, and the organization is coming under extreme pressure to reduce costs and operate as a profitable enterprise.

What are the reasons for the decline of the postal service in economic viability, in volume of mail processed and in the public’s appreciation of its role? There are a number of factors. For one, it carries a heavy financial requirement imposed by Congress as part of the Postal Accountability and Enhancement Act of 2006 (PAEA). This was enacted on December 20, 2006, and it obligates the USPS to pre-fund 75 years’ worth of future health care benefit payments to retirees within a 10-year time span. In an age when most pensions are being reduced or eliminated, it’s a heavy burden to bear.

Another economic factor has been the rising price of gas. Because the USPS operates the largest vehicle fleet in the world, every one-cent increase in the national average price of gasoline adds $8 million per year to the cost of fueling that fleet.

In addition, citizens’ view of the role of the USPS is changing. In a January 2012 column for Jewish World Review, conservative economist Thomas Sowell wrote:

"If people who decide to live in remote areas don’t pay the costs that their decision imposes on the Postal Service, electric utilities and others, why should other people be forced to pay those costs?"

As the country becomes increasingly urbanized, providing expensive services to those "off the grid" is becoming more controversial. And while this view is certainly not without merit, it’s a far cry from the government mandate that the USPS serve all Americans, regardless of geography.

Take the Snail Out of Mail

The prime reason, however, is the technological changes that have occurred. It all began with the advent of the fax machine, but it’s the widespread availability of email that’s really shifted our focus away from the mail. First-class mail volume peaked in 2001 at 103,656 million pieces, and it declined 29% from 1998 to 2008. (Learn the history behind the emergence of email in A Timeline of the Development of the Internet and the World Wide Web.)

The Advanced Research Projects Agency Network (ARPANET), the precursor of today’s Internet, came online in 1969 as a way of exchanging information between scientists. In 1971, Ray Tomlinson sent the first email; he is also credited with deciding that the "@" sign would separate the recipient’s name from the place at which the recipient’s electronic mailbox was maintained.

Although a number of other formats emerged as email systems were developed for internal corporate systems and other networks, Tomlinson’s standards were adopted throughout the ARPANET. As other networks and services were folded into the greater Internet, those standards became universal.

Soon features were added to email to allow the inclusion of graphics and complete files as attachments. Further, as email proliferated in the business world and the security of mail became a real concern, add-ons such as digital signatures and encryption came into existence. Web mail services that used secure servers (https), such as Hotmail, Yahoo Mail and Gmail, were also introduced.

It is estimated by the Radicati Group, a computer and telecommunications market research firm, that 294 billion email messages were sent per day in 2010, up from 247 billion in 2009. At the 2009 rate, that adds up to roughly 2.8 million emails every second, and some 90 trillion emails per year.

Obviously, not every one of these emails replaces a first-class letter. In fact, Radicati also estimated that 90 percent of those 90 trillion emails were spam or included malware. Nevertheless, that still leaves 9 trillion emails per year, well over the 78,203 million pieces of "snail mail" that passed through the USPS in the same year. (Learn about some of the most common types of malware in Malicious Software: Worms, Trojans and Bots, Oh My!)
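The per-second and per-year figures follow from simple arithmetic on the daily counts, and it is easy to check them. The short Python sketch below is only an illustration: the function and variable names are my own, it assumes a 365-day year, and it uses the daily counts, the 90 percent spam estimate and the USPS volume quoted above. On these inputs, the 2.8 million and 90 trillion figures line up with the 2009 daily count rather than the 2010 one.

```python
SECONDS_PER_DAY = 24 * 60 * 60
DAYS_PER_YEAR = 365  # assumption: a non-leap year


def email_rates(per_day):
    """Return (messages per second, messages per year) for a daily count."""
    return per_day / SECONDS_PER_DAY, per_day * DAYS_PER_YEAR


# Daily message counts quoted in the text (Radicati Group estimates).
daily_2009 = 247e9
daily_2010 = 294e9

per_second_2009, per_year_2009 = email_rates(daily_2009)
per_second_2010, per_year_2010 = email_rates(daily_2010)

# Keep only the 10 percent not estimated to be spam or malware.
legit_per_year_2009 = per_year_2009 * 0.10

# First-class mail volume quoted in the text, in pieces.
usps_first_class = 78_203e6

for year, per_second, per_year in [
    (2009, per_second_2009, per_year_2009),
    (2010, per_second_2010, per_year_2010),
]:
    print(f"{year}: {per_second / 1e6:.2f} million per second, "
          f"{per_year / 1e12:.1f} trillion per year")

print(f"Non-spam email vs. first-class mail: "
      f"{legit_per_year_2009 / usps_first_class:.0f} to 1")
```

Even after discarding nine messages out of ten, email outnumbers first-class mail by roughly two orders of magnitude.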

Return to Sender?

The decline in the public image of the USPS has been compounded further by the fact that, as revenue from volume has decreased, it has raised the price of stamps to try to maintain its revenue levels. This is the opposite of what generally happens in business, where prices tend to decrease along with demand, and services are cut. Because the USPS is hindered by regulation and labor contracts, it faces an uphill battle to become a profitable enterprise.

But there’s little doubt that there will be service changes and cuts, personnel layoffs and facility closures. After growing to meet the needs of the United States, the Postal Service now represents a bygone era, one in which the instant gratification of digital communication was not yet an option. And while the mail has hardly been rendered obsolete, its decline is a reminder of the disruption attendant to technological innovation and the ramifications of change.

The Evolving World of Photography

On January 19, 2012, Eastman Kodak filed for bankruptcy protection under Chapter 11. For the firm that brought photography to the masses, this blow represented a turning point in technology and the industry.

Kodak’s story is not unfamiliar in the world of technology. Kodak’s innovation started an industry and educated and developed a huge customer base. The company even invented digital photography, but it made the mistake of not staying ahead of the technological curve, a major management error that eventually led to its bankruptcy. Its story is not dissimilar to that of Xerox, which also begat an industry and, by and large because of its great success, did not make the leap into computer technology, even though its major research arm, Xerox Palo Alto Research Center (Xerox PARC), gave us laser printing, object-oriented programming, the graphical user interface and Ethernet. Kodak’s story differs in that it spans a much longer period of success. Here we’ll take a look at the history of photography and how technology has changed its course.

A Snapshot of Photographic History

Photography did not begin with Eastman Kodak. The idea of a pinhole camera dates to the fifth and sixth centuries. Centuries later, Leonardo da Vinci wrote about a "camera obscura" during the period from 1478 to 1519. It was not until 1839, however, that the term "photography" entered the English language. During the 19th century, photographers had to understand the physics of light and have the skill to use heavy photographic equipment.

George Eastman (1854 – 1932), the founder of what became Eastman Kodak, is truly the father of modern photography. He invented roll film as a method of recording photographic images; in 1888, he introduced the Kodak Camera, the first camera for roll film. It was a camera for the masses that cost $25 and came preloaded with enough film for 100 exposures. When the customer had used all the exposures, he or she sent the camera containing the film to Kodak, which developed the film, printed pictures, reloaded the camera with film for another 100 photos, and mailed it all back to the customer – all for $10.

In 1892, the firm known as Eastman Kodak was born. In 1900, the company introduced the camera that would become its most famous model, the "Brownie." My first experiences with photography were with a Brownie Box camera, which would be used on trips to the Bronx Zoo with my male and female cohorts in their Easter finery. The Brownie had no zoom or wide-angle features, and the film and the pictures were black and white. Eastman Kodak dominated the film market for decades (in 1976, it had a 90% share) and, in spite of what now feels like Stone Age technology, the public was generally satisfied with the results.

Eastman Kodak was added to the Dow Jones Industrial Average in 1930 and was included there for 74 years until it was finally removed in 2004.

Even after Eastman committed suicide in 1932, Kodak continued to innovate and prosper. It introduced Kodachrome, the first 35 mm color film, in 1935, and faced its first major technology challenge in 1948 when Polaroid introduced the first instant film camera. Kodak tried to compete with Polaroid by introducing its own instant camera but was not very successful and, after it lost a patent infringement suit to Polaroid, left the instant camera business in 1986.

The Advent of Digital Cameras

As we now know, the Polaroid was just a hint of the instant gratification we would come to expect from photography. But it wasn’t until the invention of the digital camera by a Kodak engineer, Steven Sasson, in 1975, that Kodak really delivered a better answer to consumers’ desire to see their photos right away. Unfortunately, Kodak decided not to actively move to this technology because it believed doing so would cannibalize its booming business in selling film. Initially, the judgment made some sense, as digital cameras were very expensive and did not have the resolution that film cameras did. This, of course, would soon change, and it was Kodak’s short-term thinking that would ultimately leave the company far behind its competitors.

Once the digital camera cat was out of the bag, digital cameras became smaller and cheaper, the resolution improved, and users moved rapidly to the new format. As photography became increasingly computerized, new competitors entered the market as computer and electronics firms such as Hewlett-Packard, Samsung, and Sony joined Nikon, Canon and, eventually, Kodak in producing digital cameras.

The shift to digital photography didn’t exactly take Kodak by surprise. The company saw the writing on the wall; by 1979 it had determined that the market would shift permanently to digital by 2010 and it tried to do other things to broaden its base. This included a move into copy machines, threatening Xerox. What it did not do, however, was to thoroughly embrace digital photography and begin to wind down its investment in film research, processing and development.

From Cameras to Devices

But the changes in the photography market did not end with the advent of digital photography. In fact they’d only just begun. Few people carried a digital camera around with them everywhere they went, but by the late 1990s, many people were carrying another device: a cellphone. As these cellphones became smartphones, manufacturers began to add digital and video camera capabilities to the devices. While this hasn’t killed the market for digital cameras, it has affected it and will likely continue to have an impact as the quality of the cameras built into smartphones improves.

More importantly, from a societal view, we now have a nation of photojournalists who increasingly provide the public with images of natural disasters, police brutality, sports events, criminal activity, accidents, weddings, unusual weather, graduations, etc., largely because they have the ability to do so at their fingertips. These pictures may be shared instantly via email or, increasingly, social media. This immediacy and ability to share has in some ways made photography an even more powerful medium.

Technological Innovation, Creative Disruption

We never again have to buy film, bring the exposed film to a store or send it away to be processed, or pay for additional prints. This has not only changed the photography industry, but it has also affected the way we take, view and share photographs. In its desire to continue to profit from film, Kodak overlooked a key factor of technological change: once consumers move toward a new way of doing things, there’s no turning back.

The Emergence of the Internet

In the course of this series, I’ve written about email, the World Wide Web and iTunes – all innovations made possible because of the existence of the Internet. Although many people, particularly those new to telecommunications, think that the terms "Internet" and "World Wide Web" are synonymous, they are as synonymous as Italy and Rome. The Internet, in its earliest form, went live in 1969 – the World Wide Web didn’t arrive on the scene until 1993. Here we’ll look at the history behind the Internet. But as we already know, it changed everything. (To learn more, read What is the difference between the Internet and the World Wide Web?)

From Industrialization to the Internet

The Internet was an outgrowth of a huge culture shock that occurred in the United States in the last half of the 20th century: the launch of Soviet satellite Sputnik on October 4, 1957. The U.S. had emerged from World War II as the obvious world leader in manufacturing, science and technology. Its factories, once ramped up, had out-produced every country in the world in the weaponry needed to win World War II. The planes, tanks and warships rolled off the assembly lines in a seemingly never-ending parade. When the war ended, that production power was turned to televisions, cars, radios, kitchen appliances, and other consumer products, while some other countries in the conflict were forced to turn to rebuilding bombed-out cities and factories.

On the science side, the U.S. developed the first (and, for a good while, the only) atomic bomb. This is what brought an end to the Pacific portion of WWII. The big development on the technology side was the Electronic Numerical Integrator and Computer (ENIAC), the first working electronic computer developed with government funding. ENIAC was developed at the University of Pennsylvania’s Moore School of Engineering by a team headed by John Mauchly and J. Presper Eckert. In actuality, the computer was finished too late to be of use in the war effort, thereby setting a precedent for all future major IT projects, 50 percent of which are still estimated to come in late and over budget.

With the great success in the war, a weary United States turned its attention to consumer projects that would bring comfort to returning veterans and others who had contributed to the war effort – new homes, larger and plusher automobiles, kitchen appliances, and the new technology capturing America, television. While this was going on, the leader of the Communist world, the Soviet Union, was concentrating on any science and technology that could narrow the gap between it and its largest rival, the United States.

This competition had actually begun during the war with the theft of atomic secrets from the Los Alamos development laboratory by possibly well-meaning but misguided scientists who believed that the Soviet Union’s centrally-planned system was the path to a more peaceful and fair world. As the competition progressed, most saw the Soviet-produced competitive devices as "clunky", inferior technology and laughed at claims that its scientists had actually invented television and other new technologies. Then, in October 1957, the Soviet Union launched Sputnik, the first artificial satellite to be put into orbit around the Earth. It was a major technological feat at the time, but more than that, it was a statement.

Sputnik Launches

The reaction by the United States was swift. On February 7, 1958, it announced the formation of the Advanced Research Projects Agency (ARPA). Although the initial focus of ARPA had been on space-related projects, it moved into computer communications with the appointment of J.C.R. Licklider as head of the Behavioral Sciences and Command and Control programs in 1963. The previous year, Licklider, then with Bolt, Beranek and Newman (BBN), had written a memorandum outlining his concept of an Intergalactic Computer Network. This memo is considered by many to be the basis of what came to be known as the Internet. (Read a complete history in The History of the Internet Tutorial.)

In his five-year term with ARPA, Licklider was able to convince ARPA directors Ivan Sutherland and Bob Taylor of the importance of developing such a network and, in April 1969, ARPA awarded a contract to BBN to build the network that became known as the ARPANET. The initial ARPANET connected four locations: the University of California, Los Angeles; Stanford Research Institute; the University of California, Santa Barbara; and the University of Utah. The first message between systems was sent on October 29, 1969, and the entire network went live on December 5, 1969.

In the 1960s Paul Baran at the Rand Corporation and Donald Davies at the National Physical Laboratory in the U.K. independently came up with the concept of packet switching, a technology that broke up communications messages (of any type) into small packets, sending each of them along the best possible route at the moment of sending, and putting them back together to deliver the message to the recipient. This methodology has the advantage of routing around problems, unlike the standard for voice communications, circuit switching, which depends on all lines in the circuit staying up until the transmission is complete.
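To make the idea concrete, here is a toy Python sketch of the three steps just described: breaking a message into numbered packets, letting them arrive in a different order (as they might after taking different routes), and putting them back together. It is a simplified illustration under my own assumptions, not how any real network stack is written; the function names and the eight-character packet size are hypothetical, and real packets also carry addresses, checksums and retransmission logic that are left out here.

```python
import random


def to_packets(message, size=8):
    """Split a message into (sequence number, chunk) packets."""
    return [(seq, message[start:start + size])
            for seq, start in enumerate(range(0, len(message), size))]


def deliver(packets, seed=0):
    """Simulate independent routes by shuffling the arrival order."""
    arrived = list(packets)
    random.Random(seed).shuffle(arrived)
    return arrived


def reassemble(packets):
    """Sort packets by sequence number and rebuild the original message."""
    return "".join(chunk for _, chunk in sorted(packets))


message = "The first ARPANET message was sent on October 29, 1969."
received = deliver(to_packets(message))
print(reassemble(received))
```

Because every packet carries its own sequence number, the receiver does not care which route each one took or in what order they arrived, which is what lets the network route around trouble spots.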

Internet Infrastructure Emerges

In 1973, Vint Cerf and Bob Kahn included packet switching in specifications for Transmission Control Protocol (TCP). It was paired with Internet Protocol (IP), and TCP/IP became the standard for the Internet. It is still the standard to this day.

It is important to understand that TCP/IP is simply the rule for sending any information through the now vast network that we call the Internet. It does not define the functions, use, or logic of the data. The computers, devices and communication lines (and, now, wireless connections), along with TCP/IP, make up the infrastructure of the Internet, just as the roads, bridges and tunnels make up the infrastructure of a city. The rules for a city’s infrastructure are rather simple – stay to the right and stop at red lights – but each kind of traffic that uses the infrastructure will have its own rules, or standards. There are separate rules for passenger cars, trucks, motorcycles, taxis, buses, bicycles and pedestrians, and these standards are developed as new types of vehicles come into use.

Similarly, as innovators think of new types of Internet use, new standards are developed. Over the years, we have seen the Internet grow from a domestic system to exchange information between scientists to a world-wide system that is used for email, file transfers, the World Wide Web, instant messaging, social networks, cloud computing and whatever else some innovator somewhere cooks up before you even read this.

Small Time Frame, Big Changes

It is hard to believe that the Internet is less than 50 years old and that the World Wide Web did not come into common use until the mid to late 1990s – hard because of the dramatic changes this technology has brought to both the overall economy and our everyday lives.

While the Internet did not bring foreign outsourcing (or offshoring) into existence – American companies had sales and manufacturing facilities in foreign countries for decades – it did facilitate the great expansion of the practice. Before email and file transfer, it was difficult and/or expensive to constantly coordinate activities with foreign offices. Suddenly, it became easier and businesses reacted. Soon, help desks followed manufacturing offshore to Jamaica, Ireland, India, the Philippines and former Soviet Republics.

Previously, engineers and computer scientists from other parts of the globe had to relocate to the United States to make significant incomes. Now they could stay home, and more and more businesses offshored development to India and China.

Furthermore, once the World Wide Web turned into the "killer app" that caused computers to become a staple of homes as well as offices, firms were able to convert customers into components of their business networks by having them pay their bills, download books and music, send mail, do research, book travel and look for housing online. Many of these activities were previously handled by people, such as retail clerks, bank tellers, real estate agents, data entry personnel, librarians, travel agents, professors, book sellers, mail deliverers, etc.

Finally, the advent of mobile apps has given us the ability to perform all of these activities on smartphones, wherever we are and whenever we want.

Technology That Changed the World

Once again, technological innovation has provided many of us with great benefits – and some negative disruptions. The Internet has changed the way we do so many things, from the way we work to the way we communicate and form relationships with others. That isn’t to say that all the changes have been positive for everyone, but innovation often means disruption, leaving most of us with only one choice: bend to the change or be broken by it.

Technology and Manufacturing

Since time immemorial, mankind has attempted to develop tools that would allow it to do more and more with less effort – to help in performing tasks that either required great strength or were dangerous. The first success in this area – or the first that we know of – was the wheel, which was invented somewhere between 5,500 and 7,500 years ago. Once that bit of technology got rolling, progress was rapid in finding uses for it – and we were on our way. Here we look at technology in manufacturing and how the latest advances in this area are changing the world.

From Man to Machine

Progress took a relatively long time and, although sailing ships, guns, and other weapons of war were developed through the centuries, technology did not greatly impact the making of things or "manufacturing" until the famous Industrial Revolution, which began in England around 1750. This "revolution" began by either replacing human labor with that of a machine or implementing machinery that would allow for the replacement of skilled workers with unskilled ones.

While there had always been a fascination with large, strong figures doing the work of humans (think Mary Shelley’s 1818 classic novel "Frankenstein"), when machines began replacing human workers, workers understandably pushed back, and protests erupted. The best-known may be the smashing of knitting machines by an Anstey, England, weaver named Edward Ludlam, or "Ned Ludd," in 1779. The story spread and whenever frames were sabotaged, people would say "Ned Ludd did it." By 1812, an organized group of frame-breakers became known as "Luddites," a name that is still applied to anyone who opposes technology and the change it brings.

The Luddite movement did not come to the United States, as such, but the mechanization of the textile, watchmaking and steel industries and, later, the auto industry led to the formation of labor unions.

Assembly Line Required

The next important technological development was the introduction of the moving assembly line by Henry Ford in 1913. No longer did a worker have to know how to make an automobile, only how to install a particular part. Although economist Adam Smith had written of the division of labor in his famous "The Wealth of Nations" in 1776, the concept had not taken hold in a large-scale way until Ford. Ford, a master of marketing, saw that by mass-producing autos, he could hold costs down and, by also providing high wages to his workers, he could empower them to buy his Model Ts, thus increasing market demand for the cars.

Ford’s assembly-line techniques soon caught on through all forms of manufacturing, although firms soon realized that one of the benefits of the process was that many of the workers on the line did not have to be paid as skilled workers. As movement from the farms to industry accelerated, union membership continued to grow.

Newer and more efficient machines began to further reduce the need for human workers. In 1961, robots were introduced to manufacturing when the Unimate, a programmable robotic arm developed by George Devol, was installed in a General Motors plant in Trenton, NJ. It is considered the beginning of modern industrial robotics. This set in motion the elimination of human jobs in manufacturing. As a result, a major part of management/labor negotiations from then on would revolve around the retention of jobs as mechanization continued.

As if industrial workers didn’t feel squeezed enough by automation during the 1970s, ’80s, and ’90s, the rise of the global economy, largely driven by the telecommunications revolution, brought with it offshoring. Faced with heavy global competition, American firms began to take advantage of advanced foreign automation and/or much lower labor costs, moving whole industries overseas.

The Growth of Nanotechnology

Another technological development of much greater impact is lurking around the corner. You might be surprised to discover that it’s nanotechnology, which involves machines the size of molecules.

In December 1959, future Nobel laureate in physics Richard Feynman gave a talk at the California Institute of Technology in which he postulated that individual atoms and molecules could be manipulated. This concept went largely unnoticed until the 1986 publication of K. Eric Drexler’s book, "Engines of Creation: The Coming Era of Nanotechnology." In the book, Drexler envisions a nanotech assembler, which would be able to manipulate atoms to create or manufacture desired products.

How might this work? Suppose that a person needs a pair of jeans and decides to buy them from the Levi’s website by entering his or her measurements and the color, cut and fabric of the jeans. The jeans could then be produced by the purchaser’s own assembler, which could then be used to manufacture a new toaster, coat or end table.

If you think this sounds space-age – and far-fetched – you may be right, but think about how Web pages are shown on your computer screen. The Web browser receives data and the codes (HTML tags) that tell the browser how to display the data. If you think about it this way, the notion of an assembler seems much closer to the realm of possibility. After all, it’s just like the Web browser; it receives the coding that describes exactly what is to be produced and any necessary molecules – and produces it!
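For readers who have never looked behind a Web page, the following Python sketch shows the idea of "data plus codes" in miniature. It uses the `HTMLParser` class from Python’s standard library to read a scrap of HTML and decide how to show each piece of text. The display rules (capital letters for a heading, asterisks for bold) and the names `TinyRenderer` and `render` are my own inventions for illustration; a real browser is vastly more elaborate.

```python
from html.parser import HTMLParser


class TinyRenderer(HTMLParser):
    """Toy 'browser': turns a few HTML tags into plain-text display choices."""

    def __init__(self):
        super().__init__()
        self.output = []     # pieces of rendered text, in order
        self.open_tags = []  # tags currently in effect

    def handle_starttag(self, tag, attrs):
        self.open_tags.append(tag)

    def handle_endtag(self, tag):
        if tag in self.open_tags:
            self.open_tags.remove(tag)
        if tag in ("h1", "p"):
            self.output.append("\n")  # headings and paragraphs end a line

    def handle_data(self, data):
        if "h1" in self.open_tags:
            data = data.upper()       # show headings in capitals
        if "b" in self.open_tags:
            data = f"*{data}*"        # mark bold text with asterisks
        self.output.append(data)


def render(html):
    """Feed HTML to the toy renderer and return the displayed text."""
    renderer = TinyRenderer()
    renderer.feed(html)
    renderer.close()
    return "".join(renderer.output)


page = "<h1>Jeans</h1><p>Cut: <b>slim</b>, color: indigo</p>"
print(render(page))
```

The same text could be displayed quite differently by changing only the tags, which is the point of the analogy: the instructions travel with the data, and the machine at the receiving end does the producing.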

Currently, Levi Strauss manufactures outside of the United States, and transports its jeans to the U.S. on a boat. The jeans are then taken by truck to either warehouses for the fulfillment of online orders or to retail sales outlets. If an assembler ever were perfected, it would eliminate nearly every job in that chain!

If this sounds way out in the future – and it probably is – consider that a similar result is currently obtained through a different technology, 3-D printing (or 3-D copying), through which items such as bicycle frames and circuit boards are manufactured in a process similar to that of duplicating a memo.

Even once the 3-D copying concept is digested, it’s still a rudimentary technology next to the assembler. At the same time, it is also an interim step. (Check out this video from the New York Times showing how a 3-D scanner works.)

Manufacturing of the Future

So what will happen if technological change removes the need for human workers altogether? Rest assured that a move in this direction would not be overlooked by the working public. That said, as technology changes, society also learns to adapt. From the wheel to the assembler, and so it goes.

Computers in Education

Since the beginning of verbal communication, it has been the role of one generation to pass the knowledge it has acquired down to the next generation, so that the learned material does not have to be relearned all over again. Over time, the ways of passing on this information have evolved, driven by a constant search for new means of distributing it. Here we’ll look at how technology has affected – and continues to shape – education.

Expanding Education’s Reach

As part of the search for new ways to impart information, teachers have always looked for ways to include students who could not be brought into a classroom. The first known occurrence of this was a 1728 advertisement in the Boston Gazette by a teacher named Caleb Phillips, who was seeking students for a course in shorthand writing with lessons to be "sent weekly". With the development of postal services in the 1800s, modern distance education continued to develop.

Soon thereafter, traditional colleges and universities got into the act. The University of London, tracing its program to 1858, claims to have been the first university to provide distance education courses. Its program, now known as the University of London International Programmes, continues to this day, providing graduate, undergraduate and diploma courses from the London School of Economics and other schools. By the end of the 19th century, the University of Chicago and Columbia University were also engaged in distance education in the United States.

The type of distance education used throughout the majority of the 20th century came in the form of "correspondence courses," in which students received either a complete course or individual lessons with homework assignments in the mail. Students would complete assignments and mail them back to the instructor. At the end of the course, students would either go to a testing center to take an examination on the honor system or simply receive credit for completing the course. Many well-known American universities had such programs and some, such as the University of Maryland, worked in conjunction with the U.S. military, which provided courses to service men throughout the world.

I took such a course while working as a civilian employee of the Department of Defense in the early 1960s. It was an introduction to data processing, developed at the Army’s Fort Benjamin Harrison educational facility in Indiana, that encompassed both the relatively new use of electronic computers and the older electrical accounting machines. While the course was comprehensive, it was not very useful to someone already working in the field. It was good reference material but the turnaround was too slow for someone who was only able to work on the material in spurts.

It was actually not my first exposure to distance education. From 1957 through 1982, CBS, in conjunction with New York University, broadcast "Sunrise Semester" at 6 a.m. Eastern Time each weekday. The courses covered a wide range of subjects and could be simply watched by any viewer or taken for actual NYU credit by signing up and paying a fee.

Distance Education Goes Digital

It soon became obvious, as computers and telecommunications became more ubiquitous throughout both colleges and the business world, that these systems could be used for business education as well as traditional courses. In the mid-1970s, I had occasion to use the first generalized computer-assisted instruction system, Programmed Logic for Automated Teaching Operations (PLATO). PLATO was developed at the University of Illinois as a teaching device for its students. The subsidiary of Control Data Corp. I was working for at the time provided the machines on which the system ran. The training that I took provided introductory material and then asked a series of multiple-choice questions. If the answer provided was correct, the system went on to the next question; if incorrect, it explained the correct answer. Although rudimentary by today’s standards, PLATO generated excitement as to where computer-assisted education might go, as it provided forums, message boards, online testing, email, chat rooms, instant messaging, remote screen sharing, multiplayer games, and other components that became staples of later systems.
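The question-and-answer loop I remember from that training is simple enough to sketch in a few lines of Python. This is only my reconstruction of the general idea, not PLATO’s actual lesson software or authoring language; the sample questions, the dictionary layout and the function name `run_drill` are all hypothetical.

```python
def run_drill(questions, answers):
    """Step through multiple-choice questions, explaining any missed answer."""
    transcript = []
    score = 0
    for question, given in zip(questions, answers):
        if given == question["correct"]:
            # Correct: record it and move straight on to the next question.
            score += 1
            transcript.append(f"{question['prompt']} -> correct")
        else:
            # Incorrect: explain the right answer before moving on.
            transcript.append(
                f"{question['prompt']} -> incorrect: {question['explanation']}")
    return score, transcript


# Made-up sample lesson content, for illustration only.
questions = [
    {"prompt": "Which unit stores one binary digit?",
     "choices": {"a": "bit", "b": "byte", "c": "word"},
     "correct": "a",
     "explanation": "a bit holds a single 0 or 1."},
    {"prompt": "Which device reads punched cards?",
     "choices": {"a": "line printer", "b": "card reader", "c": "tape drive"},
     "correct": "b",
     "explanation": "a card reader senses the holes in each card."},
]

score, transcript = run_drill(questions, ["a", "c"])
for line in transcript:
    print(line)
print(f"Score: {score} of {len(questions)}")
```

The immediate explanation after a wrong answer is what set this kind of instruction apart from a correspondence course, where the same feedback could take weeks to arrive by mail.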

PLATO and other systems of that time required access to terminals connected to large mainframe systems, meaning that the large majority of users would have to be on a campus or at work in an office and that their connection had to be dedicated to the educational system to which they were connected. The reach of educational systems grew dramatically with the introduction of Because It’s There Network (BITNET) in 1981. BITNET was introduced by Ira Fuchs at City University of New York and Graydon Freeman at Yale University. Running initially only on IBM mainframe systems and later also on Digital Equipment Corporation’s VAX systems, BITNET brought together, at its high point in 1991, 3,000 nodes at almost 500 educational institutions worldwide. It provided email connections throughout the network and mailing lists (primarily through LISTSERV mailing list servers), allowing users to join lists for more than 10,000 subjects and obtain and share information.

The Internet and Higher Learning

BITNET’s popularity dipped as personal computers came into wider use and the Internet spread rapidly, bringing with it File Transfer Protocol (FTP), Gopher file searching, and, eventually, the World Wide Web. Its users were integrated seamlessly into the Internet. (Learn more about how the Web evolved in A Timeline of the Development of the Internet and World Wide Web.)

Parallel to the improvements in technology and communications was the development of new methods of online education. In the early days of computer networking, game players immersed in the world of the Dungeons and Dragons role-playing game developed online platforms for interactive game playing called multi-user dungeons (MUDs) and then, as more uses were found for them, multi-user domains. The first MUD had been developed at the University of Essex in 1978 by Roy Trubshaw (and later by Richard Bartle) and was connected to the Internet in 1980, becoming the first Internet multiplayer online role-playing game. (Read more about gaming in From Friendly to Fragging: A Beginner’s Guide to Game Genres.)

Soon there were hundreds of MUDs throughout the world. Their pervasiveness led researchers at Xerox Palo Alto Research Center (PARC) to develop an object-oriented multi-user platform called a multi-user dimension object-oriented (MOO) platform. The first one, LambdaMOO, was developed by Pavel Curtis in 1990 and was an instant success, attracting more than 1,000 users. Curtis provided the LambdaCore, which represented the programs at the heart of LambdaMOO, to those who wanted to build their own MOOs. In 1993, MediaMOO was created at MIT’s Media Lab by Amy Bruckman; Diversity University was created by University of Houston graduate sociology student Jeanne McWhorter that same year. These became the premier educational MOOs.

The benefits of the LambdaCore included the ability for users to build their own educational tools and share them with others. At Diversity University, for instance, Professor Tom Danford of West Virginia Northern Community College built a science lab and taught microbiology classes; Albert Einstein College of Medicine neuroscientist Priscilla Purnick modeled a brain and taught neuroscience courses; Marist College Professor Sherry Dingman led a group of high school students in the development of the environment of the Gilgamesh legend. Many others also developed tools for lectures, slide presentations and simulations.

Online Courses Get Interactive

In 1995, I taught the first interactive online course at Marist College, a graduate capstone course in information systems, using the Diversity University platform. Students employed by IBM and about to complete the Marist program had been transferred throughout the country as part of an IBM reorganization. Rather than have them try to find a course elsewhere that would combine the elements of their Marist program, we set up an online section of the course. I "met" with students every Sunday from 11 a.m. to 2 p.m., and we completed the program, during which time the students only had to come to the campus for a final conference and paper presentation.

Although MOOs were successful in educational use, they were text-based only and faded away as the World Wide Web became more popular and Web-based course-management programs developed for education, such as Blackboard, ANGEL, Moodle and Sakai, came into use. Each of these systems provides facilities for all of the features originally conceived as components of PLATO (forums, message boards, online testing, email, chat rooms, instant messaging, remote screen sharing, multiplayer games, etc.) as well as the ability to link to websites and include videos, graphics, word processing and presentation files. More and more, these systems are used not only for distance education courses but also to support in-classroom courses as well as "blended courses," a combination of distance and in-classroom courses.

Students and faculty, when presented with the idea of online courses, often seem to think that they will be easier than the more traditional, in-person classroom experience. I have taught both kinds of courses and I actually find online courses to be more difficult. As a professor, I miss the ability to "see" whether students are getting it and to know when I have to go deeper into a subject – so I must go deeper in all subjects, and provide more material (web links, videos, documents, etc.) and more assessment tools (quizzes, homework, research papers, etc.). That means students have more work and need more discipline, because they do not have set hours for classroom attendance and are left to their own time-management skills, which are often lacking. In fact, time management is a major issue in online education, and is the reason why many colleges have minimum GPA requirements for taking online courses.

One enhanced educational tool came with the advent of Second Life, a graphics-based virtual reality platform, or a graphic MOO on steroids, which educational institutions such as Princeton, NYU, Marist, Monroe College, Ohio University, Emory University, MIT, USC, Purchase College, and Notre Dame flocked to. Although Second Life did not reach the commercial success to which it aspired, the educational experimentation goes on.

Another recent development is colleges’ placement of curriculum online at no charge. MIT has been at the forefront of this effort and, as professors from around the world share and use such material, the overall quality of all courses should improve.

Learning in the Future

As I look back on my relatively short involvement with education, it is obvious that there has been mind-boggling development. If we’ve learned anything from technology, it’s that it changes the way we do things, often in very unexpected ways. In education, the ability to gain access to resources from anywhere at any time has changed our notion of what a future classroom might look like. What happens in between remains to be seen. And so it goes …

The Explosion of Data

As we move through our analysis of the impact of technology on everything we do, we must note the factor that impacts industries across the board – marketing, law enforcement, manufacturing, science, education, politics, government, and on and on – data! Never before in history have we had so much data available to use and misuse.

The Beginning of Big Data

It has been written that if we use the variable "X" to indicate all the data developed by humans from the beginning of time until the inauguration of Barack Obama, then "10X" has been developed since then. Although it is also estimated that more than 50 percent of that data is redundant or spam, that is still an awful lot of data! There is census data, scientific data, economic data, marketing data, credit data, sports data … data about every possible thing. And it’s constantly increasing. (The increase of data and the importance of crunching it has made data science one of the hottest new careers. Read more in Data Scientists: The New Rock Stars of the Tech World.)

It is the personal data that tends to attract our attention though. We read and perhaps worry about the amount of data that Google, Facebook and other websites have on each of us and how it may be used. Some critics of all this data collection are concerned about government surveillance; others are bothered by the collection of information about our purchasing habits by stores and credit card companies; still others think that medical data collected by doctors, hospitals and insurance companies may somehow be used against them. In short, data is a big deal!

How did this explosion of data occur? Obviously, computers were the original prime component. No longer was information simply typed on paper and kept in articles, journals and books. With computers, it was stored and could be modified, refined, and/or built upon. The advent of the Internet added another dimension by allowing data to be transported, collaborated on, and made available worldwide.

If You Build It They Will Come: More Storage, More Data

A very important factor in data collection is the steady stream of breakthroughs in storage technology that have occurred over the past few decades. Storage devices have gotten bigger in capacity, smaller in size and much less expensive. In the less than 50 years of microcomputer use, storage devices have gone from cassette tape to floppy diskette to low-capacity fixed disks to high-capacity fixed disks, and the cost of storage has continued to decline.

A case in point: In 1980, I purchased my first fixed disk for a microcomputer, a 10 million-byte Corvus. It cost me $5,500. At the time of writing (2012), I have trillion-byte fixed disks. As you can see from the following table, the cost has shrunk geometrically:

| Unit | Capacity (Bytes) | Cost in 1980 | Cost Today in 1980 Prices | Actual 2012 Prices |
| --- | --- | --- | --- | --- |
| 10-Million Byte Drive | 10,000,000 | $5,500 | | |
| 1 MB | 1,000,000 | $550 | | |
| 1 GB | 1,000,000,000 | $550,000 | <$1 | |
| 1 TB | 1,000,000,000,000 | $550,000,000 | <$100 | |

The two-terabyte disk sitting next to my MacBook cost around $200. If prices per megabyte had remained at 1980 levels, it would have cost over $1 billion! In addition, I have 16 billion bytes (16 GB) on a chain around my neck. The 16 GB USB drive weighs about an ounce while my 10 MB Corvus drive in 1980 must have weighed 15 pounds; it was heavier than the computers it serviced.
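
For readers who want to check the arithmetic, here is a minimal Python sketch. It assumes that the 1980 price scales linearly with capacity and that units are decimal (1 MB = 1,000,000 bytes), as in the table; the function and constant names are invented for illustration.

```python
# Baseline from the text: a 10-million-byte Corvus drive cost $5,500 in 1980.
BASELINE_BYTES = 10_000_000
BASELINE_COST_USD = 5_500

# Decimal units, matching the capacities listed in the table.
MB = 1_000_000
GB = 1_000 * MB
TB = 1_000 * GB


def cost_at_1980_prices(capacity_bytes: int) -> float:
    """Scale the 1980 price linearly with capacity (a simplifying assumption)."""
    return BASELINE_COST_USD * capacity_bytes / BASELINE_BYTES


# Print what each capacity would cost if 1980 per-byte prices still applied.
for label, size in [("1 MB", MB), ("1 GB", GB), ("1 TB", TB), ("2 TB", 2 * TB)]:
    print(f"{label}: ${cost_at_1980_prices(size):,.0f}")
```

At $550 per megabyte, a two-terabyte disk works out to roughly $1.1 billion at 1980 prices.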

It is important that storage capacity keeps increasing because the data universe is now doubling every two years. This doubling puts more demand not only on our storage capacity, but also on our communications channels and, most important, on the software tools that collect, extract, combine, and analyze this data.
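
To get a feel for what doubling every two years implies, here is a rough Python sketch. It assumes the doubling period stays constant, which is a simplification, and the function name is hypothetical.

```python
def projected_volume(initial: float, years: float, doubling_period: float = 2.0) -> float:
    """Return the data volume after `years`, assuming it doubles every `doubling_period` years."""
    return initial * 2 ** (years / doubling_period)


# Growth relative to today's volume (taken as 1 unit) over the next two decades.
for years in (2, 4, 10, 20):
    print(f"After {years} years: {projected_volume(1, years):,.0f}x today's volume")
```

Under that assumption, ten years of growth multiplies the data universe by 32, and twenty years multiplies it by more than a thousand.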

Data Vs. Information

I have used the terms "data" and "information" rather interchangeably so far – actually they are quite different. Data is the raw material – numbers, pictures, etc., while information is data that has been shaped into a form that is understandable and useful to human beings. It is the task of the computer scientist to develop tools and algorithms that will constantly make these masses of data more useful. (Learn more about this in Big Data: How It’s Captured, Crunched and Used to Make Business Decisions.)

Data and Our Privacy

While we are generally happy with (or at least not troubled by) the fact that our orthopedist’s report goes immediately via computer link to our internist, or that Amazon immediately makes us aware of a new book by a favorite author or a new accessory for our Kindle Fire, most people don’t want marketers to know about everything they do or buy online. Most of us don’t really like the idea of surveillance cameras throughout our cities either. Even more of us chafe at the idea of being denied employment, a promotion or a mortgage because of something we posted over social media. Fewer still are comfortable with a government agency collecting and storing information about us. They can’t do that, can they?

No, the government can’t gather such information, but as is pointed out in the very comprehensive 2005 book "No Place to Hide," by Washington Post investigative reporter Robert O’Harrow, private firms are not restricted by law from gathering this information and putting it together – and then selling it to government agencies. (To read more, see Don’t Look Now, But Online Privacy May Be Gone for Good.)

We each have many digital identifiers: a Social Security number, credit card numbers, home and cellphone numbers, retailer bonus programs, insurance policies, auto registrations and driver’s licenses, etc. And, as facial recognition becomes more refined, these identifiers are increasingly being cross-referenced to build a demographic picture of us.

Two New York Times articles, "The Age of Big Data" by Steve Lohr and Charles Duhigg’s "How Companies Learn Your Secrets," take us inside the world of those trying to find out all about us. Lohr’s piece focuses on big data itself and the vast research efforts going on to be able to glean more quality information from it by such entities as the United Nations and major U.S. corporations. In the corporate world, just a little edge in this area could mean millions of dollars in sales, while government entities hope it can provide either better service or better surveillance.

The Duhigg piece, on the other hand, goes into one firm, Target, and its mission to find out everything possible about clients and potential clients – not only what they buy and/or return but where they live, how much money they make, when they shop and generally what’s going on in their lives. New baby? New house? Child away to college? Once you are in Target’s system, it will purchase demographic information to supplement what it already has. Duhigg writes, "Target can buy data about your ethnicity, job history, the magazines you read, whether you’ve ever declared bankruptcy or gotten divorced, the year you bought (or lost) your house, where you went to college, what kinds of topics you talk about online, whether you prefer certain brands of coffee, paper towels, cereal or applesauce, your political leanings, reading habits, charitable giving and the number of cars you own." All of this information can be found somewhere and it is increasingly becoming a commodity that’s bought and sold.

What can we do about this?

The obvious first step is to realize what you are doing when you sign up for a new service of any type. How much information do you have to give to obtain the service? Is it worth it?

The next thing to consider is whether your privacy really concerns you. Some see the loss of privacy as inevitable. Computer scientist/science fiction writer David Brin, in his 1999 book, "The Transparent Society: Will Technology Force Us To Choose Between Privacy And Freedom?," sees no end to cameras and computer monitoring. As such, he wants us to be able to monitor those monitoring us. By extension, we should also be aware of everyone who has our information and what they may do with it. (Find out more about this in What You Should Know About Your Privacy Online.)

For those of us who are concerned and wish to do what we can to slow or curtail the use of our personal data, one thing we can do is put pressure on corporations that gather data about us to be totally transparent as to who has access to that data.

As we educate ourselves on these issues, we might also consider the following paragraph from Lohr’s "The Age of Big Data" article: